We’re at a weird moment with AI. It’s getting more powerful every day, promising to change how we work and solve problems. But here’s the thing: the way most of us interact with AI, through those chat windows, might actually be holding it back.

I know, I know. That sounds backwards. Chat interfaces are everywhere. ChatGPT, Copilot, Claude. They all use chat. It’s become the default way we think about talking to AI. But what if that’s the problem?

This isn’t just about what feels nicer to use. It’s about whether AI actually becomes useful in our daily work, or stays stuck as a novelty we occasionally remember to check.

The Problem with Chat

Let me start with something personal: I prefer dictation to typing. For me, typing out a question is friction. It’s extra work. And here’s what I’ve learned: AI works best when there’s no friction at all.

Think about it this way. When you’re using an app you know well, like your email or your calendar, you don’t stop to think about how to use it. You just… use it. The interface disappears. That’s what good design does.

But chat windows? They make you stop. They make you think about how to phrase your question. They make you switch contexts, from whatever you were doing to “now I’m talking to the AI.” That’s friction. And friction kills adoption.

The industry is already moving beyond text-only chat. We’re seeing AI that can understand images, video, and audio. That’s progress. But we’re still stuck thinking chat is the answer to everything.

Here’s what I think happened: Chat became popular because it was easy to build. Developers could quickly show off what Large Language Models (LLMs), AI systems that understand and generate text, could do. It felt familiar, like texting. So it stuck.

But just because something’s familiar doesn’t mean it’s the best way to do things. We’ve spent decades learning how to design good interfaces. Why throw all that knowledge away?

The Real Cost of Chat-First Thinking

This isn’t just a design preference. It has real costs.

For the people building AI tools: If you think chat is the only way to deliver AI, you’re limiting yourself. You might build a chatbot when what people really need is AI built into the workflow they already use. You’re solving the wrong problem.

For the people using AI: If you only see AI through chat windows, you’re missing what it could actually do for you. You might think “AI is cool but I don’t have time to chat with it.” And you’d be right. But that’s not AI’s fault. It’s the interface’s fault.

For organizations: Companies invest in AI, but if it’s only available through chat interfaces that feel like extra work, adoption fails. People take the path of least resistance. If AI isn’t on that path, they’ll skip it.

We’re also seeing AI evolve into something more sophisticated, what people are calling “agents.” These are AI systems that can do multi-step tasks on their own, even working with other AI agents. Try managing that complexity through a chat window. It’s like trying to conduct an orchestra with a single drum. The interface doesn’t match what the technology can do.

The Copilot Myth

A lot of people think the solution is just putting chat closer to where work happens. Like having a Copilot sidebar in your application. That’s better than a standalone ChatGPT window, sure. But it’s still missing the point.

True integration isn’t about proximity. It’s about embedding intelligence directly into what you’re already doing.

Let me give you an example. Say you’re using a cloud platform, and there’s a chat window for budget questions. The system already has your data. It knows common budget mistakes. It can see when you’re about to hit a spending limit.

So why should you have to ask? Why should you have to type “Hey, am I about to go over budget?” when the system could just… tell you? Or better yet, prevent the problem before it happens?

That’s what I mean by proactive intelligence. The AI uses its access to your data to anticipate what you need, instead of waiting for you to ask. The right interface doesn’t ask for input. It removes the need for input.
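To make the budget example concrete, here is a minimal sketch of that kind of proactive check. It assumes the platform already knows the current spend, the limit, and where you are in the billing period. The names `check_budget` and `BudgetAlert`, the 80% warning ratio, and the naive linear spend projection are all hypothetical choices for illustration, not any real cloud platform's API. The point is that the system runs this whenever spend data updates, so nobody has to type "am I about to go over budget?"

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BudgetAlert:
    level: str    # "warning" or "critical"
    message: str


def check_budget(spent: float, limit: float, day: int, days_in_period: int,
                 warn_ratio: float = 0.8) -> Optional[BudgetAlert]:
    """Hypothetical proactive check, triggered by new spend data rather than a user question."""
    if limit <= 0 or not (1 <= day <= days_in_period):
        raise ValueError("limit must be positive and day must fall within the period")
    # Assumption: a naive linear projection where the average daily spend so far continues.
    projected = spent / day * days_in_period
    if spent >= limit:
        return BudgetAlert("critical", f"Spent {spent:.2f} of {limit:.2f}; limit reached.")
    if projected >= limit:
        return BudgetAlert("warning",
                           f"On pace to spend {projected:.2f} against a {limit:.2f} limit.")
    if spent >= warn_ratio * limit:
        return BudgetAlert("warning",
                           f"Already at {spent / limit:.0%} of the {limit:.2f} limit.")
    return None  # Nothing worth interrupting the user about.
```

For example, `check_budget(600, 1000, 15, 30)` returns a warning, because spending 600 by day 15 puts you on pace for 1200, while `check_budget(300, 1000, 15, 30)` returns `None` and the interface stays quiet. A real system would likely use a better forecast, but the design choice is the same: the alert surfaces in the existing view instead of waiting for a chat prompt.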

What I’ve Learned from Power Platform

I’ve seen this play out in real organizations. Teams that relied heavily on chatbots (like Power Virtual Agents, now called Copilot Studio) for internal services saw a huge jump in usage when they moved to native application interfaces (like Power Apps).

People didn’t want to chat with a service. They wanted to do work through interfaces designed for the task. Once someone in leadership gets frustrated with clunky chat experiences and decides “AI is all hype,” that door closes for the whole organization.

AI is a Helper, Not a Destination

Here’s a fundamental misunderstanding: People often treat AI like it’s the destination. Like the goal is to interact with AI.

But AI isn’t the destination. It’s the helper. It’s the tool that gets you to your actual goal faster and better.

The interface should reflect that. It should fade into the background, not demand that you adapt to its conversational quirks.

Chat became the default not because it was the best user experience, but because it was a straightforward way to show off what LLMs could do. It’s a developer shortcut that doesn’t always translate to a user-first experience.

And honestly? It can be limiting for developers too. Instead of embedding AI into existing, stable applications, developers might build standalone chat apps. That increases complexity and time-to-value. It’s often cheaper and less risky to add AI as a feature enhancement within something that already works, rather than building an entirely new application around chat.

The Human + AI Thing

I believe this: A person plus AI will always be better than either one alone. But if AI interfaces are clunky, organizations might wrongly conclude that “human plus robot isn’t as good as just the robot.” That leads to dangerous pushes for full automation where human oversight is critical.

On the flip side, if users reject AI because the interfaces are frustrating, they stay overworked. They’re doing tasks that AI could handle, but the interface barrier keeps them from getting that help.

This is fundamentally a leadership challenge. We need to guide our teams to think beyond what’s immediately obvious.

What to Do Instead

So if chat isn’t the answer, what is?

1. Think integration first, not chat first. Instead of asking “How can we build a chatbot for this?” ask “How can AI be invisibly embedded to make this workflow better?”

2. Do real UX research. Don’t assume chat is the answer. Understand what people are actually trying to accomplish. Then design for that.

3. Build proactive intelligence. Create AI systems that anticipate needs and offer help at the right moment, rather than waiting for questions.

4. Educate your team. Show them examples of AI that’s deeply embedded, context-aware, and doesn’t require chat. Broaden their understanding of what an “AI interface” can be.

5. Measure what matters. Don’t count chat sessions or queries. Measure whether AI helps people complete tasks faster, make better decisions, and reduce effort.

A Practical Path Forward

Here’s how you might actually do this:

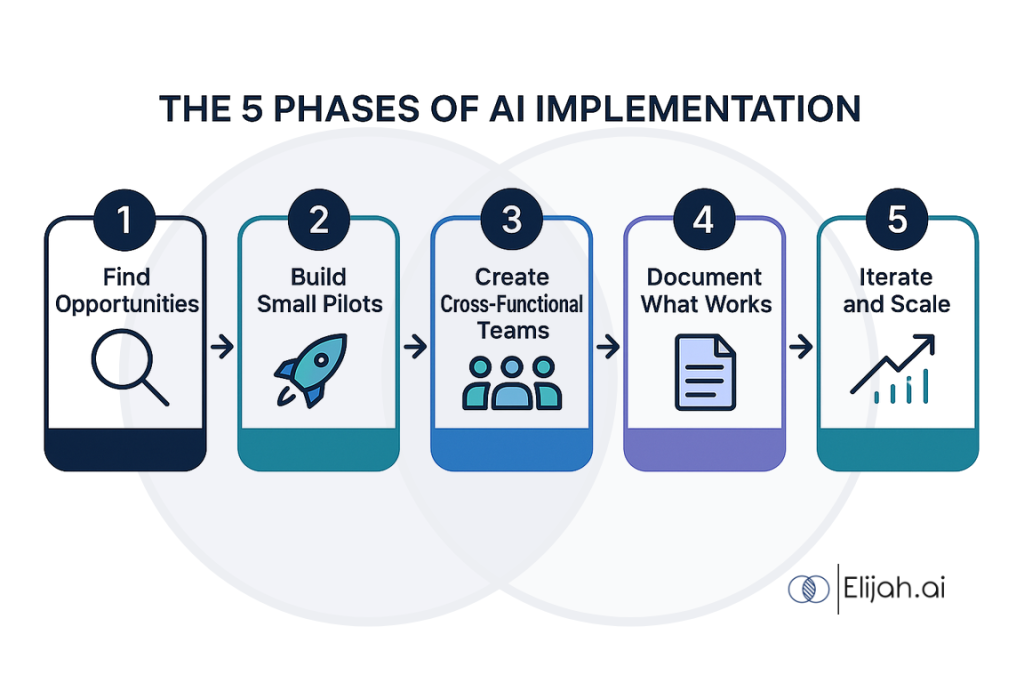

Phase 1: Find the opportunities (1-3 months)

Look at your existing applications and workflows. Where are the friction points? Where could AI help proactively, without requiring someone to ask?

Phase 2: Build small pilots (3-6 months)

Pick one or two high-impact opportunities. Build AI features that are deeply embedded in the existing interface. For example, instead of a budget chatbot, build an AI feature that flags budget anomalies directly in your financial dashboard.
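As a rough illustration of that pilot, the sketch below flags days whose spend is unusually high compared with the preceding week, so the dashboard can highlight them directly. The function name `flag_anomalies`, the 7-day window, and the threshold of 3 standard deviations are hypothetical choices; a rolling z-score is just one simple technique, swapped in for illustration, not a recommendation of a specific detector.

```python
import statistics
from typing import List


def flag_anomalies(daily_spend: List[float], window: int = 7, k: float = 3.0) -> List[int]:
    """Return indices of days whose spend is unusually high versus the trailing window.

    Hypothetical dashboard helper using a simple rolling z-score rule.
    """
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: treat any increase as worth highlighting.
            if daily_spend[i] > mean:
                flagged.append(i)
        elif (daily_spend[i] - mean) / stdev > k:
            flagged.append(i)
    return flagged
```

With `daily_spend = [100, 102, 98, 101, 99, 100, 103, 250, 101]`, only index 7 (the 250 spike) is flagged. The dashboard would mark that day in place, which is the "embedded, not chat" pattern described above.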

Phase 3: Create cross-functional teams (ongoing)

Bring together UX designers, AI engineers, product managers, and people who actually use the tools. Give them permission to challenge chat-first assumptions.

Phase 4: Document what works (6-12 months)

When something works, codify it. Create a library of patterns for frictionless AI features. Share these successes internally.

Phase 5: Iterate and scale (ongoing)

Gather feedback. Measure success by task completion and user satisfaction, not chat engagement. Refine and scale what works.

The goal is simple: Make AI an enhancement to how work gets done, not an additional task.

The Bottom Line

Chat windows served a purpose. They introduced us to what conversational AI could do. But they’re not the foundation for how AI will actually deliver value.

The future of AI is seamless integration. It’s proactive assistance. It’s interfaces so intuitive they fade into the background, letting human capability, powerfully augmented, take center stage.

This requires challenging assumptions, investing in thoughtful design, and prioritizing real utility over novelty. The organizations that get this will actually harness AI’s transformative power.

The rest? They’ll be stuck wondering why their expensive AI investments aren’t paying off.

Here’s my question for you: What does your interface say about how you define intelligence?

If it says “intelligence is something you chat with,” you might be missing the point. Intelligence should be woven into the fabric of how you work. Invisible. Indispensable. Frictionless.

That’s the future. And honestly? It can’t come soon enough.
